A Tesla vehicle running the latest Full Self-Driving (FSD) Supervised update suddenly veered off the road and flipped upside down, a scary crash that the driver said he couldn’t prevent.

We have seen many crashes involving Tesla’s Supervised FSD over the years, but the vast majority of them have a major contributing factor in common: the driver is not paying attention or is not ready to take control.

A common crash scenario with Tesla FSD is that the vehicle doesn’t see an obstacle on the road, like a vehicle, and crashes into it, even though the driver would have had time to react if they were paying enough attention.

Despite its name, Full Self-Driving (FSD) is still considered a Level 2 driver assist system and is not fully self-driving. It requires drivers to stay attentive at all times and to be ready to take control, which is why Tesla has more recently added ‘Supervised’ to the name.

According to Tesla, the driver is always responsible in a crash, even if FSD is activated.

The automaker has implemented driver monitoring systems to ensure that drivers pay attention, but it has been gradually relaxing them.

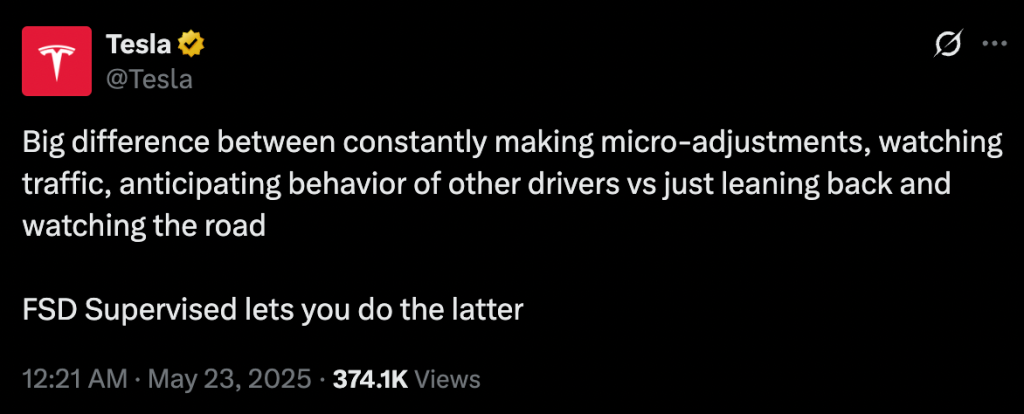

Just today, Tesla published a post on X saying that drivers only need to “lean back and watch the road” when using FSD.

Sitting back and watching the road was exactly what Wally, a Tesla driver, was doing when his car suddenly veered off the road in Toney, Alabama, earlier this year.

Wally leased a brand-new 2025 Tesla Model 3 with FSD and understood that he needed to pay attention. When talking with Electrek yesterday, he said that he regularly used the feature:

I used FSD every chance I could get. I actually watched YouTube videos to tailor my FSD settings and experience. I was happy it could drive me to Waffle House, and I could just sit back and relax while it drove me on my morning commute to work.

Two months ago, he was driving to work on Tesla Full Self-Driving when his car suddenly swerved off the road. He shared the Tesla camera video of the crash:

Wally told Electrek that he didn’t have time to react even though he was paying attention:

I was driving to work and had Full Self-Driving on. The oncoming car passed, and the wheel started turning rapidly, driving into the ditch, side-swiping the tree, and the car flipped over. I did not have any time to react.

The car ended up flipping upside down from the crash:

Fortunately, Wally only suffered a relatively small chin injury from the accident, but it was a scary experience:

My chin split open, and I had to get 7 stitches. After the impact, I was hanging upside down watching blood drip down onto the glass sunroof, not knowing where I was bleeding from. I unbuckled my seatbelt, sat on the fabric interior between the two front seats, and saw that my phone’s crash detection had gone off and told me the first responders were on their way. My whole body was in shock from the incident.

The Tesla driver said that one of the neighbors came out of their house to make sure he was okay, and local firefighters arrived to get him out of the upside-down Model 3.

Wally said he was on Tesla FSD v13.2.8 on Hardware 4, Tesla’s latest FSD technology. He requested that Tesla send him the data from his car to better understand what happened.

Electrek’s Take

This is where Tesla FSD gets really scary. I get that Tesla admits that FSD can make mistakes at the worst possible moment and that the driver needs to pay attention at all times.

The idea is that if you pay attention, you can correct those mistakes, which is true most of the time, but not always.

In this case, the driver had less than a second to react, and even if he had reacted, it might have made things worse: he could have corrected, but not enough to get back on the road, and hit the tree head-on instead.

In cases like this one, it’s hard to put the blame on the driver. He was doing exactly what Tesla says you should do: “lean back and watch the road.”

A very similar thing happened to me last year when my Model 3 on FSD veered to the left, trying to take an emergency exit on the highway for no reason. I was able to take control in time, but it created a dangerous situation as I almost overcorrected into a vehicle in the right lane.

In Wally’s case, it’s unclear what happened. It’s possible that FSD believed it was about to hit something because of the shadows on the ground. Here’s the view from the front-facing camera, a fraction of a second before FSD veered to the left:

But it’s just speculation at this time.

Either way, I think Tesla has a complacency problem with FSD: its drivers are starting to pay less attention while using it, which leads to some crashes. But there are also crashes like this one that appear to be 100% caused by FSD, with little to no opportunity for the driver to prevent them.

That’s even scarier.